Let's start with a confession. In 2015, Electoral Calculus predicted a hung parliament, instead of a majority Conservative government. On the Friday after the election, I received an email from a member of the public who expressed strong disappointment in the error and went on to say "given the events of yesterday I would now have difficulty believing you if your poll indicated tomorrow was Saturday".

Such frustration was understandable. But to judge whether it's going to happen again, we have to look carefully at exactly what went wrong and what did not. In 2015, the error was in the polls, which gave incorrect answers. The polls on average had mis-estimated the difference between Conservative and Labour by about 6pc. Electoral Calculus does not conduct its own polls, but relies on polling by the professional pollsters to feed into our model. The model itself worked quite well - if the polls had given the correct inputs, then the model would have been correct to within ten seats. But with bad inputs, it produced a bad output.

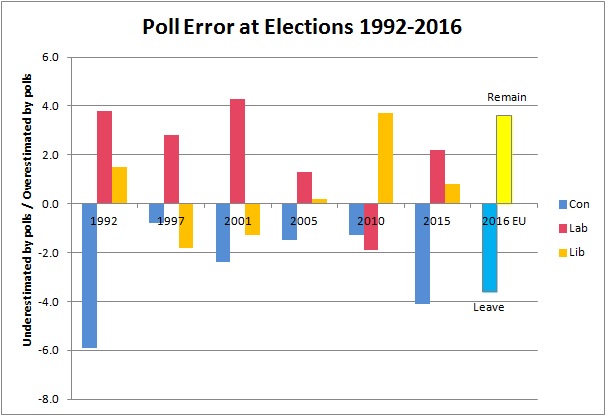

A year later, and a similar thing happened to the polling around the EU referendum. The final polls from the major pollsters mis-estimated the difference between Remain and Leave by about 7pc and wrongly indicated a Remain victory.

Later in 2016, US pollsters were perceived as having given Donald Trump little chance of being elected President.

Taken together, these events have given the polling industry a credibility crisis. Many people no longer trust the polls at all. But is that right? What went wrong in 2015 and 2016, and will it be better this time?

The bar chart above shows the history of UK pollsters' errors. A bar above the zero line means that party was overestimated by the pollsters, and a bar below means the party was underestimated. The most striking fact is that the Conservatives have been underestimated at every election in the last twenty-five years. Conversely, Labour have been overestimated for all but one of those elections.

The worst pollster performance was in 1992 when Neil Kinnock was wrongly predicted to beat John Major. But the 2015 election and the EU referendum are both large errors by historical standards. This evidence confirms the public perception that the polls have been bad recently. As Andrew Hawkins, Chairman of pollster ComRes (one of the least wrong pollsters), confirmed "it was apparent that the tendency to overstate Labour and understate Conservative vote shares continued to be a problem".

Following the debacle, the British Polling Council launched an inquiry under Prof Patrick Sturgis. It identified two main technical problems which the pollsters had to tackle.

The first is the problem of pollster samples not being fairly representative. And the second is the problem of general election turnout.

Taken together, the problem is that the pollsters hear the opinions of people who want to talk to pollsters, while the general election measures the opinions of people who want to vote. These groups are not the same, and the first group is younger and more left-wing than the second.

The basic theory of sampling is that you pick people at random and ask them how they will vote, and thus get an even sample. The only error will come from natural chance ("sampling error") which leads to the famous +/-3pc theoretical poll error. But true random sampling is difficult to do, so pollsters usually start with a non-random sample. This is either an internet panel of volunteers or a set of people who agree to a phone interview. Because this sample is non-random, it needs to be adjusted to compensate. This is done using quotas to weight the sample, to make sure that there is the right mix of men and women, young and old, rich and poor, and so on.
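For readers who like to see the arithmetic, the +/-3pc figure can be reproduced with the standard textbook formula for a truly random sample. This is only a sketch: the sample size of 1,000 and the 95pc confidence level are assumptions chosen for illustration, not figures taken from any particular pollster.

```python
import math


def margin_of_error(sample_size: int, share: float = 0.5, z: float = 1.96) -> float:
    """Approximate 95pc margin of error for one party's vote share.

    Assumes simple random sampling, which real polls rarely achieve.
    """
    return z * math.sqrt(share * (1.0 - share) / sample_size)


# Assumed example: 1,000 respondents, with the party on 50pc.
moe = margin_of_error(1000)
print(f"+/-{moe * 100:.1f}pc")
```

Note that this covers natural chance only. It says nothing about the extra error that arises when the sample is not random in the first place.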

But it is not foolproof. If, say, the only young people who agree to take part are politically committed (and thus likely to be left-wing), then you will over-sample young Labour voters and under-sample young Conservative voters.
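A small worked example may make this concrete. It is a sketch with invented numbers: the age split, the true Labour support in each group and the sample counts are all assumptions, and real pollsters weight on many more variables than age.

```python
# Assumed make-up of the electorate by age group.
POPULATION_SHARE = {"young": 0.30, "old": 0.70}

# Assumed true Labour support within each group (invented, not real data).
TRUE_LABOUR = {"young": 0.50, "old": 0.30}

# Invented raw sample. The young people who agreed to take part are
# politically committed, so 65pc of them say Labour rather than 50pc.
SAMPLE = {
    "young": {"n": 200, "labour": 130},
    "old": {"n": 800, "labour": 240},
}


def weighted_labour_share(sample, population_share):
    """Weight each age group up or down to its share of the population."""
    total_n = sum(group["n"] for group in sample.values())
    estimate = 0.0
    for name, group in sample.items():
        sample_share = group["n"] / total_n
        weight = population_share[name] / sample_share
        estimate += weight * group["labour"] / total_n
    return estimate


weighted = weighted_labour_share(SAMPLE, POPULATION_SHARE)
truth = sum(POPULATION_SHARE[g] * TRUE_LABOUR[g] for g in POPULATION_SHARE)
print(f"Weighted poll estimate: {weighted * 100:.1f}pc")
print(f"True Labour share:      {truth * 100:.1f}pc")
```

In this made-up case the weighting gives exactly the right mix of young and old, yet the poll still puts Labour on 40.5pc against a true figure of 36pc. Quotas fix who is in the sample by category, but not which kind of person within each category agreed to answer.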

Sampling is easy to get wrong, and it's hard to know when you're doing it right. Unrepresentative samples (rather than natural chance) were the main cause of the 1992 polling disaster as well as 2015. The Sturgis panel recommended that pollsters "take measures to obtain more representative samples", but was light on the detail of how to do this. So pollsters have spent a lot of time thinking about this. Martin Boon, public opinion director at ICM, revealed that "most pollsters have spent more hours than are healthy on rebuilding polling models as a result of the 2015 miss. Many have introduced new techniques which directly contend with the historic tendency to inflate Labour shares of the vote".

The truth is that it is hard to know if this problem has been or can ever be fully fixed. Since the previous election was only two years ago and people can remember their previous vote more clearly, using that as a quota may help more at this election.

The turnout problem is also tricky. From one perspective, our entire democratic process is just a very large opinion poll, but one that is not fully representative. Not everyone chooses to vote, and returning officers do not chase up non-voters to get their opinion. So the official election result "undersamples" the people who don't vote.

Pollsters need to allow for this. They need to determine if their respondents are really going to vote or not. In practice this is difficult. You can ask people whether they are going to vote, but their replies are often over-optimistic. More people say they will vote than actually do vote. If younger voters are Labour-inclined but less likely to vote, then that skews the polls. Andrew Hawkins believes that accurate turnout forecasting is the key to getting it right. ComRes have applied differential turnout weights to different demographic groups, and calibrated the weights to people's actual behaviour and the 2015 election result.
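The effect of differential turnout can be sketched in a few lines. The turnout probabilities and poll responses below are invented for illustration, and this is not a description of ComRes' actual method, only of the general idea of counting each respondent in proportion to how likely they are to vote.

```python
# Assumed probability that a respondent in each age group really votes.
TURNOUT_PROB = {"young": 0.45, "old": 0.75}

# Invented poll responses: stated vote intention by age group.
RESPONSES = {
    "young": {"LAB": 180, "CON": 120},
    "old": {"LAB": 280, "CON": 420},
}


def party_shares(responses, turnout_prob=None):
    """Vote shares, optionally weighting each group by its turnout probability."""
    totals = {}
    for group, counts in responses.items():
        weight = 1.0 if turnout_prob is None else turnout_prob[group]
        for party, count in counts.items():
            totals[party] = totals.get(party, 0.0) + weight * count
    grand_total = sum(totals.values())
    return {party: votes / grand_total for party, votes in totals.items()}


raw = party_shares(RESPONSES)
weighted = party_shares(RESPONSES, TURNOUT_PROB)
raw_lead = raw["CON"] - raw["LAB"]
weighted_lead = weighted["CON"] - weighted["LAB"]
print(f"Conservative lead if everyone votes: {raw_lead * 100:.1f}pc")
print(f"Conservative lead, turnout-weighted: {weighted_lead * 100:.1f}pc")
```

With these assumed figures the Conservative lead grows from 8pc to roughly 12pc once the Labour-inclined but less reliable young voters are weighted down. The hard part in practice is choosing the turnout probabilities, since they cannot be checked until after polling day.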

Turnout was also a polling problem at the EU referendum. Turnout was surprisingly high, and the increase was driven by Leave voters turning out, whereas many pundits had thought that a high turnout would be good for the Remain side.

It's hard to check if this problem has also been fixed, though ComRes' approach has been commendably scientific.

Unfortunately, we still don't really know for sure whether the polls have been fixed. But I do know two things: it's wrong to think the polls are meaningless rubbish; and it's foolish to trust them completely. The polls have meaning, but they will always have some error.

The pollsters hope it will be business as usual at this election, and the size of the Conservative lead could dwarf any poll error this year. But the acid test of their theoretical improvements will come on polling day. Until then, we can't know how right they are.